Artificial intelligence (AI) is increasingly at the forefront of conversations concerning technology, communication and the arts. Now AI pioneer Geoffrey Hinton, speaking to Reuters, says AI might become an issue “more urgent” than even climate change.

Hinton, known as the “godfather of AI,” announced his resignation from Google in a statement to the New York Times on May 1. Hinton was a top computer scientist at the tech firm for over 10 years but now wants to “talk about the dangers of AI without considering how this impacts Google,” as he said in a tweet following his statement.

Hinton, a computer scientist and cognitive psychologist, studies artificial neural networks and deep learning, a technique that allows machines to learn by example and do things that humans are able to do. His pioneering work paved the way for recent AI developments such as OpenAI’s ChatGPT.

Hinton is now part of a growing number of tech experts publicly expressing concerns about the danger artificial intelligence could pose if it ever becomes more intelligent than humans. At the end of March, experts (including Elon Musk and Apple co-founder Steve Wozniak) signed an open letter asking for the training of the most powerful AI systems to be paused for at least six months to give society time to adapt to increasingly intelligent AI.

The letter, from the Future of Life Institute, reads: “AI systems with human-competitive intelligence can pose profound risks to society and humanity.”

Just how worried should we be about artificial intelligence?

How artificial intelligence is changing society

Scandals such as the Cambridge Analytica affair during the 2016 US presidential election have made people more sceptical about handing over personal information to AI. The scandal involved the collection of data from over 50 million Facebook profiles in a data breach so that the analytics teams behind the Trump campaign and the winning Brexit campaign could target voters.

Speaking in an online interview, Hinton tells the BBC that AI will “allow authoritarian leaders to manipulate their elections.”

Data collected and processed by AI poses a high risk to individuals’ rights and freedoms because companies have so far had no real reason or incentive to build privacy protection into their systems.

Privacy in the AI context is also challenging to regulate without stifling the development and progress of artificial intelligence. There are already few regulations on traditional privacy protection, and the added complication of AI processing data makes digital privacy an even bigger concern.

Data bias is another big issue that stems from the programming of AI. This is when data used to train the AI is incomplete, skewed or otherwise biased – for example, the data is wrong, excludes a demographic or was collected in a deceitful way.
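One simple way to picture the "excludes a demographic" case is to compare how often each group appears in a training set with how often it appears in the population the system is meant to serve. The Python sketch below is only an illustration under stated assumptions: the function name `representation_gaps`, the group labels, the reference shares and the 5% tolerance are all hypothetical and do not come from any real system or dataset.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Return groups whose share of `samples` falls short of the
    reference share by more than `tolerance` (all values hypothetical)."""
    counts = Counter(samples)
    total = len(samples)
    gaps = {}
    for group, expected in reference_shares.items():
        # Share of the training data that belongs to this group.
        observed = counts.get(group, 0) / total if total else 0.0
        shortfall = expected - observed
        if shortfall > tolerance:
            gaps[group] = round(shortfall, 3)
    return gaps


# Made-up training data: group "C" is missing entirely, "B" is under-sampled.
training_groups = ["A"] * 80 + ["B"] * 20
# Made-up shares of each group in the wider population.
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(training_groups, reference)
print(gaps)
```

A check like this only catches under-representation. It says nothing about data that is wrong or was collected deceitfully, which need separate scrutiny.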

The way in which humanity interacts with AI is also changing and becoming uncertain.

Last month, a photo generated entirely by AI won the creative open category at the Sony World Photography Awards. The winner later revealed that he would not be accepting the prize and that he had entered to see whether competitions were ready to distinguish between AI-made entries and genuine photography.

Also in April, an anonymous music producer released a song called “Heart on My Sleeve” with vocals supposedly by Canadian rapper Drake and Canadian singer The Weeknd. The song was made with AI and enjoyed instant success on TikTok, with one user commenting: “This is better than any Drake song.” So where does AI in art leave already established artists?

Following the success of “Heart on My Sleeve” and the subsequent online discourse about the legal and creative consequences, music producer Grimes, winner of eight awards for her music including a Harper’s Bazaar Woman of the Year award, tweeted that she would split the royalties on any successful AI song using her voice. She even unveiled new software to help users do this. She is also the former girlfriend of Elon Musk.

I'll split 50% royalties on any successful AI generated song that uses my voice. Same deal as I would with any artist i collab with. Feel free to use my voice without penalty. I have no label and no legal bindings.

— 𝖦𝗋𝗂𝗆𝖾𝗌 ⏳ (@Grimezsz) April 24, 2023

Speaking to Pitchfork, Holly Herndon, an American composer who has led conversations on artificial intelligence in music and unveiled her own AI music software in 2021, said of her software: “vocal deepfakes are here to stay. A balance needs to be found between protecting artists, and encouraging people to experiment with a new and exciting technology.”

Again, this raises the question of what regulations can be placed on AI without preventing people from exploring new technologies. Writing about her software, Holly+, Herndon described it as “communal voice ownership.” This might be the best way for the music industry to adapt to AI.

Whilst AI in the arts is legally frustrating, it is relatively innocuous compared to other ways in which AI is interacting with humanity. From a self-driving Uber car killing a pedestrian to a chess robot breaking its opponent’s finger, AI can present a very real physical threat.

Because AI is not a real person, there are also legal complications. Who should be held responsible if AI malfunctions and causes harm? In the case of the self-driving Uber car, the human back-up driver was charged, having been watching something on a phone at the time. For the chess robot, it was its opponent, a seven-year-old boy, who was found to be at fault because he took his turn too fast, a violation of safety rules according to Sergey Smagin, vice-president of the Russian Chess Federation.

So is operating AI properly all it takes to keep it safe for human use? Placing the blame on the humans involved sets a dangerous precedent should AI become more intelligent than humans.

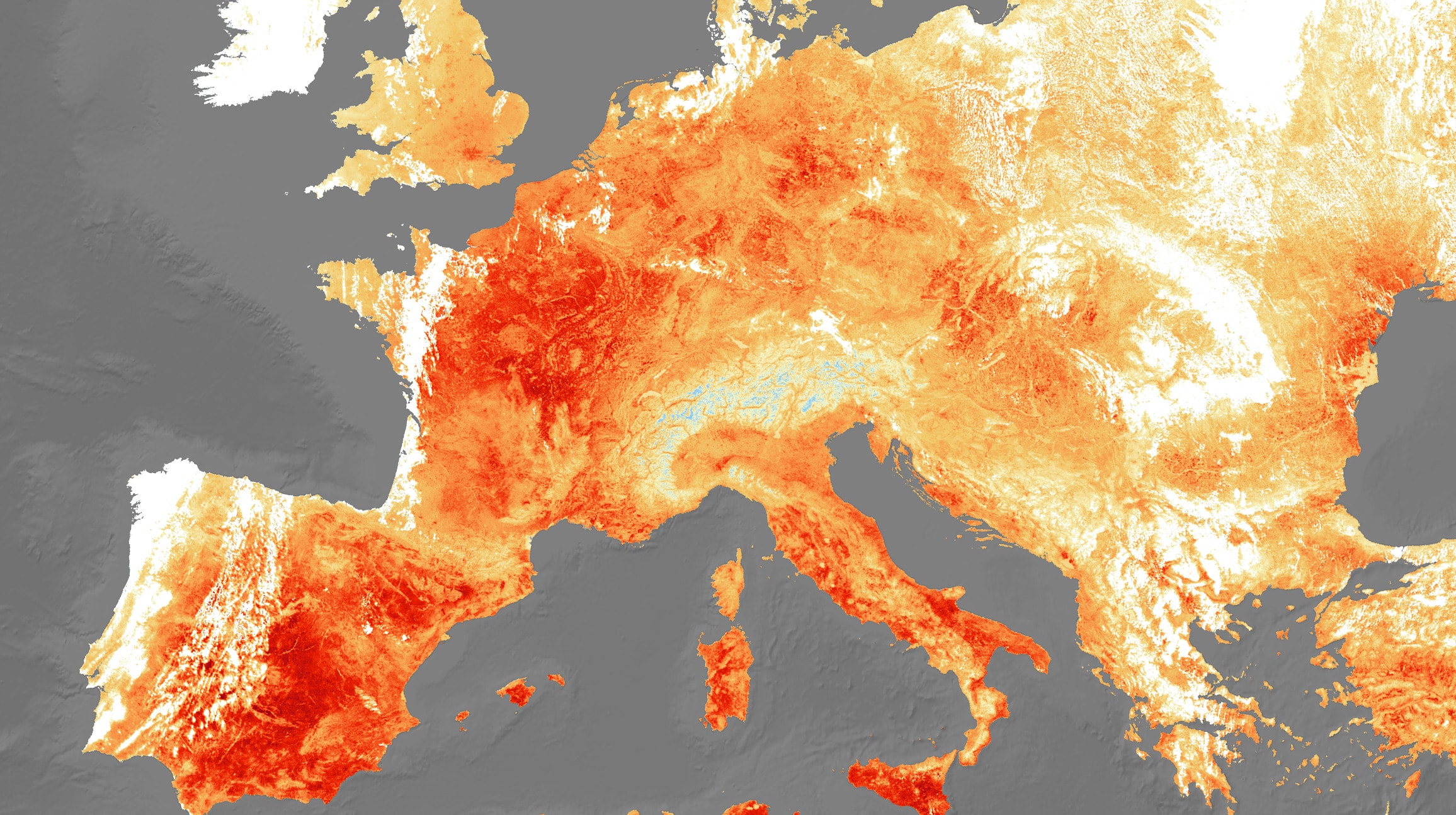

AI and the climate

Geoffrey Hinton disagreed with those who signed the letter to pause the training of AI. He told Reuters: “It’s utterly unrealistic.”

He went on to say: “I’m in the camp that thinks this is an existential risk, and it’s close enough that we ought to be working very hard right now, and putting a lot of resources into figuring out what we can do about it.”

On climate change, Hinton said: “I wouldn’t like to devalue climate change. I wouldn’t like to say, ‘You shouldn’t worry about climate change.’ That’s a huge risk too. But I think this might end up being more urgent.”

Hinton argues that we know what needs to be done to prevent and mitigate the effects of the climate crisis, but this is not the case with AI.

“With climate change, it’s very easy to recommend what you should do: you just stop burning carbon. If you do that, eventually things will be okay. For this it’s not at all clear what you should do,” said Hinton.

The future effects of artificial intelligence are still unclear, from its effects on people to the resulting legal complications. The uncertainty surrounding AI makes solutions much harder to find, especially given the difficulties in regulating it.

Speaking to the BBC, Hinton said that “the big difference is that with digital systems, you have many copies of the same set of weights, the same model of the world. And all these copies can learn separately but share their knowledge instantly,” going on to say “that’s how these chatbots can know so much more than any one person.”

It’s becoming increasingly apparent that AI technologies need better regulations to protect users. Potentially the only way to keep society safe from artificial intelligence in the future is to limit the extent to which it can be developed and taught.

Editor’s Note: The opinions expressed here by the authors are their own, not those of Impakter.com — In the Featured Photo: A robot playing the piano. Featured Photo Credit: Unsplash.