Language accessibility has always been at the heart of who gets help, who gets heard, and who gets left behind. Artificial intelligence can now mimic a human voice, generate natural-sounding speech, and translate the speech in a video from one language into another. When voice cloning is paired with systems that can translate video, AI begins to resemble a digital interpreter, one that never sleeps and never runs out of languages. For the 75% of global internet users who prefer content in their native tongue, this is a revolutionary shift in accessibility.

For vulnerable communities, the appeal is considerable. Refugees learning to cope with unfamiliar systems, older citizens disoriented by a rapid digital transition, and individuals with speech difficulties all stand to benefit. However, voice cloning also carries risks that cut deeper for those already exposed to harm. The ethical question is not whether the technology works (it clearly does) but whether it can be used without exploiting the very people it claims to support.

The 2025 Voice Preservation Landscape

AI voice cloning uses a machine learning model to produce speech that matches the characteristics of a specific person's voice, such as tone, pitch, and emotional inflection. The market for voice cloning in translation was projected to reach $1 billion in 2025, growing at a compound annual growth rate of 42%.

This opens up highly personalized modes of communication. Someone who is losing their voice to a disease such as ALS can now “bank” it. Several organizations, including Team Gleason and Bridging Voice, have teamed up with tech leaders to distribute free voice cloning licenses to thousands of ALS patients, helping them preserve their vocal identity. As many as 95% of those with ALS may lose the ability to speak, making this “digital legacy” a lifeline for mental health and family bonding.

Humanitarian Innovation and Shadow AI

At the 2025 AI for Good Global Summit, it was disclosed that 93% of staff in humanitarian organizations now use AI-based services in their daily work. These tools help give affected people access to health, education, and legal services. But, as pointed out in the report, “shadow AI,” meaning individual use of AI tools without organizational oversight, raises serious governance and privacy issues.

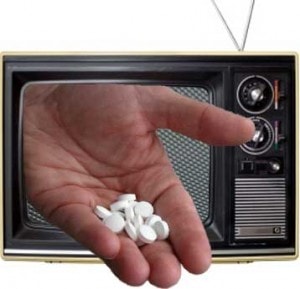

When we use technology to translate video content for refugees, we may be handling highly sensitive biometric information. For instance, if a voice is cloned to create a humanitarian announcement and the underlying data is not properly protected, malicious actors can abuse it for “vishing,” or voice phishing. The realism of voice-cloning technology has made it a favorite tool of scammers: according to a 2025 report, 25% of adults have fallen victim to an AI voice scam.

The Problem of Linguistic Privilege

A truly ethical digital interpreter must address linguistic diversity. Studies conducted in April 2025 highlight a substantial “accent bias” in synthetic speech platforms. When trained mostly on Western data, AI models tend to reinforce linguistic privilege, performing worse on regional English accents, such as Indian, Nigerian, or Ethiopian, than on standard accents from the UK or the USA.

For vulnerable groups, this means the technology is likely to flatten their cultural identity or, worse, misunderstand their needs during a disaster. Accessibility is not achieved by having everyone sound the same. It is achieved by having the AI reflect the user’s real vocal heritage.

The Four Pillars of Ethical Deployment

To make AI voice cloning a force for good, the industry has shifted towards a Responsible Innovation framework that focuses on the following areas:

- Informed, Reversible Consent: A person must clearly understand how their voice will be used and must be able to withdraw permission later. Tennessee’s ELVIS Act of 2024 established a global precedent by becoming the first legislation to protect an individual’s voice as a property right.

- Universal Transparency: Listeners must be told when they are hearing a synthetic voice. Companies such as Resemble AI are starting to implement this by integrating PerTH watermarking, which adds invisible signatures to the audio to confirm it is synthetic.

- Governance Beyond Technology: Policy needs to keep pace with the technology. As of late 2025, 57 countries are considering AI-related laws, with a focus on accountability and the right to be forgotten, ensuring that a voice clone doesn’t live longer than it’s supposed to.

- Community-Led Design: Vulnerable groups should be treated not only as “users” but also as makers of AI. Ethical datasets should be built with and by the communities they represent, so that AI systems learn the variations in speech patterns across the global community.

Conclusion: Translating Intent into Care

Making voice technology accessible to vulnerable communities is not about building a zero-defect interface or achieving technological purity; it’s about resilience. Voice cloning can be the connector that opens boundaries once we recognize that biometric privacy deserves the same inviolability as medical privacy.

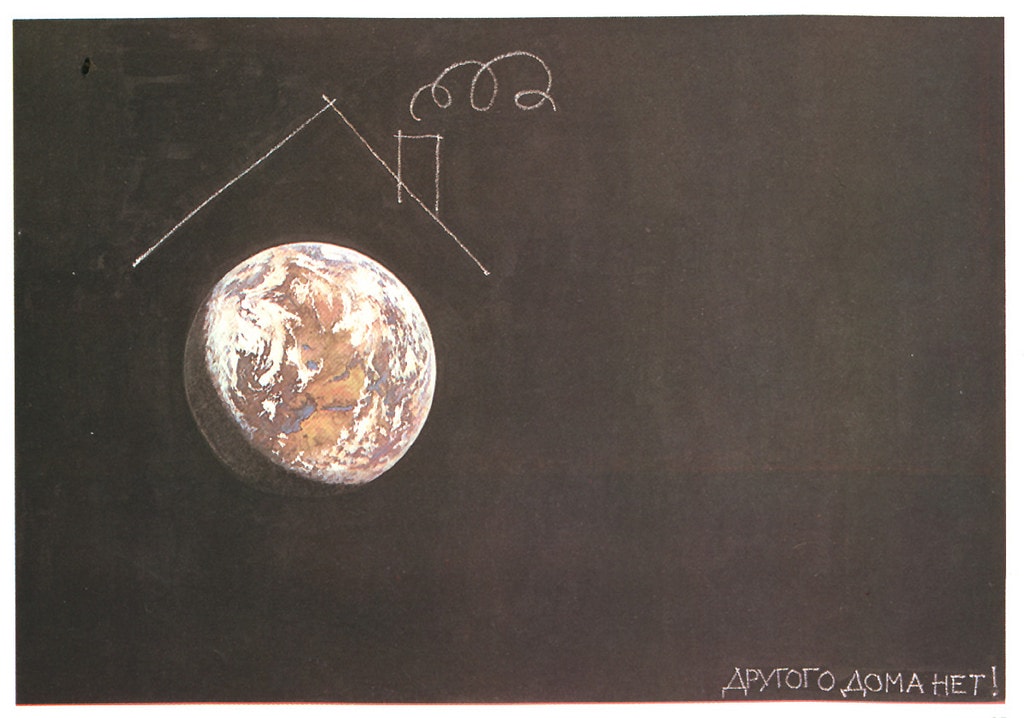

When we provide video and audio translation to those in need, we are, in fact, handling the most human aspect of what defines them as people. By grounding this innovation in consent, transparency, and inclusive design, we can make sure that in the digital interpreter of tomorrow, their voices will not be muffled but amplified.

Editor’s Note: The opinions expressed here by the authors are their own, not those of impakter.com — In the cover: Auto Refinancing — Cover Photo Credit: freepik