In a series of four articles about science, millennial scientist Jesse Zondervan delves into the future of geoscience applications in relation to the rise of globalisation. In his second article he explores the way scientists have dealt with negative impacts of their research, how this has changed and how we might think about it in the future. How do we deal with and think about science in a changing world, where the applications of science and technology are growing in scale, complexity and uncertainty?

Scientists are trained to do science, not to make decisions. That makes sense, but what happens when these are the people who know the most about issues that are high-stakes for society?

In this article I will explore how scientists have dealt with the negative side effects of their research in the past, and how we might consider such impacts in the 21st century. What approaches do scientists take to dealing with the negative side effects of their research?

In some cases dealing with side effects after they are apparent and already creating trouble may be too late. One such case is Artificial Intelligence, where people are now starting to consider the need for safety research to predict possible destructive side effects that we may not even have imagined.

Increasingly, the solutions and innovations that science and technology provide, such as artificial intelligence, virology and geoengineering, have global impact and are growing in complexity and uncertainty.

How will we navigate this uncertain, important and exciting time of science in a changing world?

A high impact, destructive science application – the scientists behind the nuclear bomb

Credit EUROPEAN MOLECULAR BIOLOGY ORGANIZATION

Wernher von Braun, Fritz Haber, Edward Teller and Andrei Sakharov were all twentieth-century scientists. What they have in common is that they all worked on developing military weapons. It is interesting to think about how they related their science to society:

Wernher von Braun worked on rockets and missiles rather than on the nuclear bomb itself. He started in Nazi Germany, and after he surrendered to the Americans he continued his work in the US. He didn’t care about who was using his technology. He just wanted to work on his science.

Fritz Haber is usually known for his synthesis of ammonia, a process that revolutionized agricultural fertilizers and has had a massive impact on global food provision. However, as a proud patriot, Haber also actively promoted the use of chemical weapons in World War I.

Then you have nuclear physicists Edward Teller and Andrei Sakharov who did not mind working on nuclear weapons, but then spoke out against their proliferation.

These twentieth-century scientists all had different ways of reflecting on and dealing with the impacts that their science created. Von Braun took the neutral stance, in which he did not consider any consequences of his science. He isolated himself from society.

Haber, on the other hand, took active control of how his science could be used, in what he thought would be positive uses. His support for the use of chemical weapons makes sense from a twentieth-century view of national duty, which I suspect not many modern scientists would share (see the previous article in this series).

The stories of these scientists are stories of individual approaches, which failed to prevent the negative outcome of nuclear bombs, first when they were used against Japan and then when they led the world into the Cold War.

An early 21st century approach: to publish or not to publish?

Let’s go from the twentieth century to a twenty-first century approach of managing negative side effects of science.

What if you’re working on a disease to kill off a mouse pest and discover a biological weapon that could kill people? This is what stirred the virology community in 2001, when a group of Australian scientists genetically engineered the mousepox virus to suppress the immune response in mice, creating a strain that could kill even vaccinated animals. The mousepox virus is related to the human smallpox virus.

The dilemma is clear: publish and make the technique available to any military or terrorist group that would wish to use it as a weapon? Or keep it secret and hope no one will ever find out?

After worldwide discussion on the pros and cons of poxvirus research, a committee of senior scientists concluded such research needs to be done and published. They also recommended open access to research to provide the research community with knowledge and time to develop counter-measures and to warn the public about impending dangers.

Careful consideration by scientists and interaction with policy makers led to a collective strategic decision on publishing, and a guideline for communicating future similar virological research.

Such a collective and deliberate guideline is a big step forward from the Cold War fiasco. Nonetheless, it came about only after the danger to society was fully recognized.

More powerful science requires forward thinking

Photograph: Yonhap/Reuters

Fortunately, some of the most powerful science applications are now recognized to pose a future danger. We are talking about Artificial Intelligence, or AI for short.

Moore’s Law states that the number of transistors on a chip doubles approximately every two years, which amounts to an exponential increase in hardware capabilities. At this moment in time we’re seeing the development of AI accelerating, and we may be about to see it shoot past human capabilities.
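To get a feel for what doubling every two years means, here is a minimal sketch in Python. The function name `growth_factor` and the chosen time spans are my own illustrative assumptions, and real hardware progress does not follow such a clean curve.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiplicative growth after `years`, assuming a steady doubling
    every `doubling_period_years` (an idealised reading of Moore's Law)."""
    return 2.0 ** (years / doubling_period_years)


# Illustrative time spans only; they are not taken from any dataset.
for years in (2, 10, 20):
    print(f"after {years} years: x{growth_factor(years):,.0f}")
```

Under this idealised assumption, ten doublings in twenty years already give roughly a thousandfold increase.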

80,000 Hours is an organisation that aims to inform people about where they can have the most impact with their career. As part of their research, they investigated the existential risks that we face today, which include the unintended consequences of AI as outlined by Nick Bostrom in Superintelligence.

Photo Credit: 80000 hours

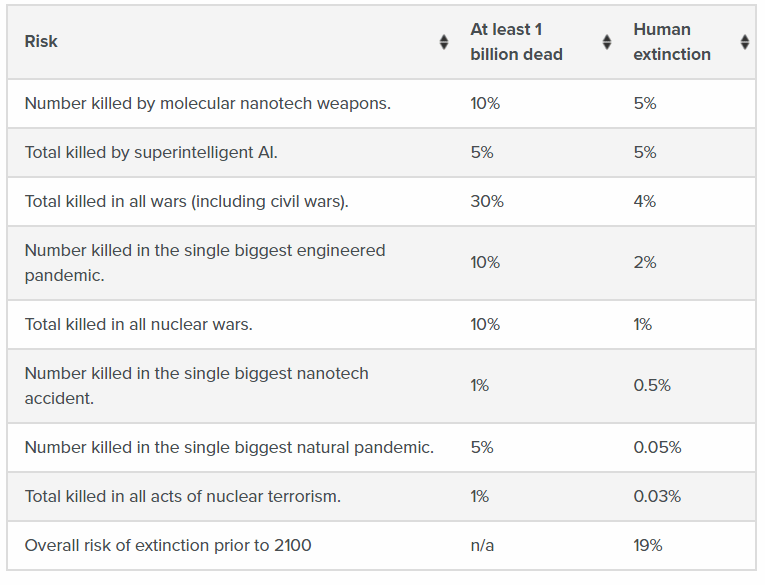

And it’s not just AI. In this table, created at a conference on catastrophic risks, Anders Sandberg and Nick Bostrom add up the existential risks that we face today. They arrive at a 19% chance that we go extinct by 2100.

Technology and the applications of science are becoming more powerful, and so are the risks associated with them.

The funding for research into safeguarding ourselves against the negative consequences of new science applications lags behind this realisation. According to figures reviewed by 80,000 Hours, 1.5 trillion goes into global R&D and 1.3 trillion into luxury goods. How much do we spend on safety research? About 10 million.
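The size of that gap is easier to grasp as a ratio. The sketch below simply divides the figures quoted above; the variable names are mine, and the amounts are taken at face value in whatever currency the original figures use.

```python
# Figures as quoted in the text (attributed there to 80,000 Hours).
GLOBAL_RND = 1.5e12        # global R&D spending
LUXURY_GOODS = 1.3e12      # spending on luxury goods
SAFETY_RESEARCH = 10e6     # spending on safety research

# How many times larger each budget is than the safety research budget.
rnd_ratio = GLOBAL_RND / SAFETY_RESEARCH
luxury_ratio = LUXURY_GOODS / SAFETY_RESEARCH

print(f"R&D spending is about {rnd_ratio:,.0f} times safety research spending")
print(f"Luxury spending is about {luxury_ratio:,.0f} times safety research spending")
```

On these numbers, for every unit spent on safety research, roughly 150,000 are spent on R&D in general.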

I think we are not thinking enough about the potential darker sides of our science and technologies. On top of that, I think it is the scientists of the disciplines behind the science applications who should do this research. Let me give an example from geoscience and then discuss the role of scientists in high-stakes, science-loaded issues.

Geoengineering – unknown unknowns?

Photocredit: edward stojakovic

Geoscience is hardly nuclear engineering or virology, but as its applications become more powerful, we can start to see some potential dark sides to its use.

One of the interesting things that we may end up doing is geoengineering the climate. But different countries and regions have different interests. Take for example the effects of the Mount Pinatubo eruption in 1991, which spewed large amounts of aerosols into the atmosphere:

It exacerbated drought in the Saharan region, it caused flooding in the US, and it upset monsoons in Asia. What would happen if we were deliberately changing the climate?

Imagine that one region wants to lower its temperatures by injecting aerosols into the atmosphere. You can expect negative effects similar to the ones that some regions experienced in the aftermath of the Mount Pinatubo eruption.

This issue also suffers from the bias that globalisation introduces into science, which I outlined in my previous article. While geoengineering might be pushed by a UN agenda to limit carbon dioxide concentrations, the negative side effects on poor countries are not well researched. In an article published by Thomson Reuters, scientists from the global south voice their concerns about geoengineering.

Now I hope you can imagine the potential problems that we’re starting to face and the conflicts that could arise over them.

The role of scientists in a post-normal science world

The application of aerosol engineering draws on geoscience knowledge. While the people involved in developing the engineering applications might be engineers, the geoscience knowledge required to predict the potential negative side effects of using this technology is held by a wider community of geoscientists.

We need open communication between scientists, engineers and policy makers.

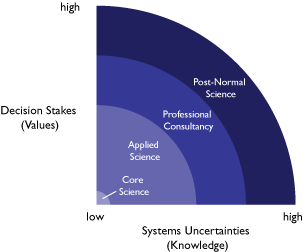

Twenty-five years ago Silvio Funtowicz and Jerry Ravetz developed their concept of “post-normal science” (pictured above), in which they argue that when stakes are high, decisions urgent and the science complex and uncertain, social consensus and lay expertise are valued equally to, or even more highly than, hard scientific evidence.

In short, in some cases policy cannot afford to wait for science to catch up.

We can see many such post-normal issues popping up: artificial intelligence, climate change and geoengineering all fall under the post-normal definition. All of these involve complex, uncertain science lacking clear consensus, and all are high-stakes for a global society.

I argue the role of scientists is not just providing the science necessary for technological applications. They should also be consulted and offer imaginative insight into potential effects that such applications may have.

Of course, hard science to evaluate the potential threats of technological applications, where it exists, would be preferred over imaginative speculation. Even so, I believe involving scientists in conversations with policy makers is better than nothing at all. Such conversations should be held with policy makers and representatives from all regions that may be affected.

I can see the proliferation of increasingly powerful geoscience tools, which I will discuss in my next article. As the impact of these applications grows in significance, so do the potential negative consequences. And as some nuclear scientists, virologists and computer scientists may know, the time has come to look forward.