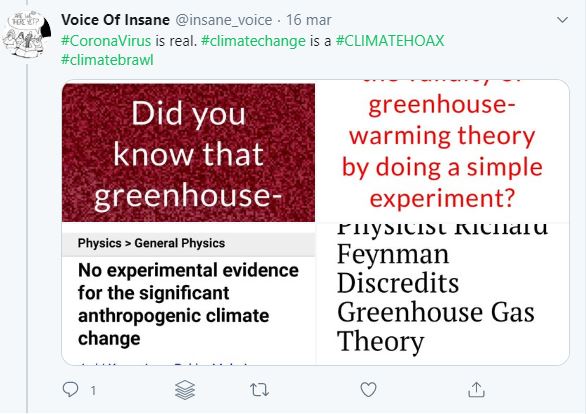

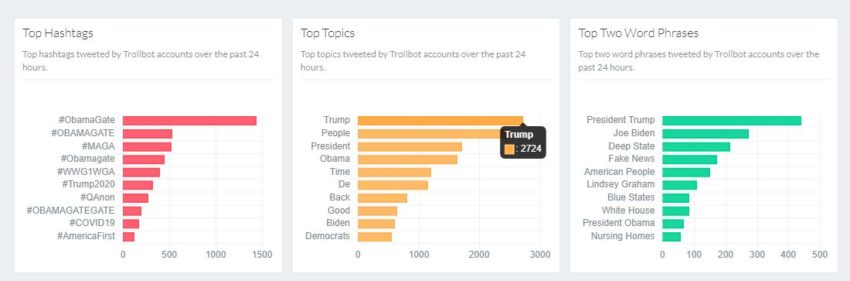

Inauthentic accounts are fake accounts and bots that spread disinformation on social media, especially on Twitter. Bot Sentinel is a tool created to find them.

We reached out to Christopher Bouzy, founder and CEO of Bot Sentinel, to learn more about how his system works. The interview revealed the passion he puts into his project and his efforts to make social media a less "toxic" place.

Christopher is doing this work despite the threats and attacks he has received over the years. Bot Sentinel is also funded mainly through donations.

If you use Twitter but have never used Bot Sentinel, perhaps you should. You can either install the browser extension or check Twitter users on BotSentinel.com. You may be amazed at how many inauthentic accounts you find!

What is your background? When did you decide to build a tool to fight against disinformation and inauthentic accounts?

Christopher Bouzy: I started coding at a young age, when I was nine, but I only began doing it professionally in my early twenties. My focus back then was on encryption, algorithms, scraping websites, and building bots… good bots!

In 2016, I decided to focus on disinformation and on issues related to online content. It was hard for the general public to identify inauthentic accounts on social media. Those inauthentic accounts were spreading disinformation, but at the time they were not considered a serious threat.

The 2016 U.S. presidential election confirmed that inauthentic accounts were a significant problem: they were influential enough to help swing an election! I was working on machine learning and artificial intelligence projects, and I wanted to apply my knowledge and skills in this field to help people fight disinformation.

The tools available back then were not accurate at all, and Twitter users frequently called each other bots based on their results.

I also noticed that "real" toxic inauthentic accounts and bots were constantly attacking journalists, climate activists, and other public figures who were trying to get their message out.

Bot Sentinel was created to address that: to improve the online conversation and make it easier for people to tell what is real and what is fake.

How does Bot Sentinel work? How does it identify inauthentic accounts on Twitter?

C.B.: Other tools might look for signs of automation, such as how many times an account has tweeted. That is not a good benchmark, because some people with a lot of free time tweet hundreds of times per day.
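To make the point concrete, here is a minimal sketch of the kind of volume-only rule Bouzy criticizes. The `Account` type, the handles, and the cutoff of 72 tweets per day are all hypothetical, chosen only for illustration; the point is that a pure volume threshold flags a very active human while letting a low-volume bot through.

```python
from dataclasses import dataclass

@dataclass
class Account:
    handle: str            # hypothetical example handle
    tweets_per_day: float
    is_automated: bool     # ground truth, known only in this toy example

def naive_automation_flag(account: Account, threshold: float = 72.0) -> bool:
    # Hypothetical volume-only rule: anything above `threshold` tweets/day is called a bot
    return account.tweets_per_day > threshold

accounts = [
    Account("@prolific_human", tweets_per_day=150, is_automated=False),
    Account("@slow_bot", tweets_per_day=20, is_automated=True),
]

for acc in accounts:
    flagged = naive_automation_flag(acc)
    verdict = "correct" if flagged == acc.is_automated else "wrong"
    print(f"{acc.handle}: flagged={flagged}, automated={acc.is_automated} -> {verdict}")
```

Both verdicts come out wrong, which is why tweet volume alone makes a poor signal.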

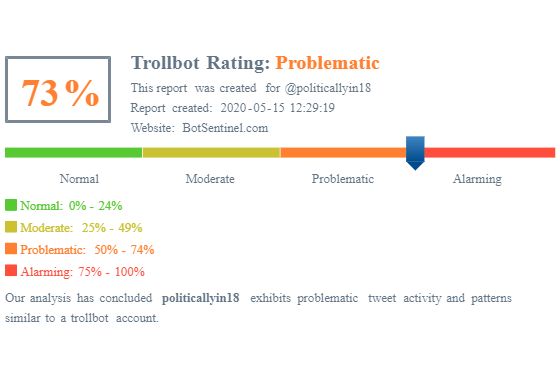

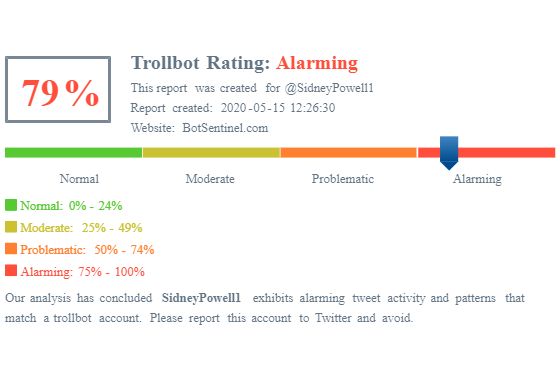

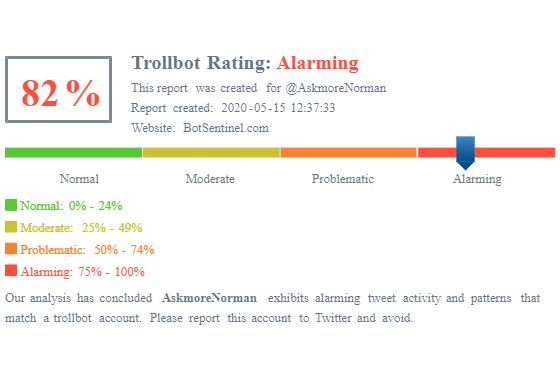

Bot Sentinel instead uses Twitter's terms of service as a guide to decide whether an account should be considered "bad". But while it is fairly easy for the average Twitter user to identify an inauthentic account, the system behind Bot Sentinel needed to learn to do this automatically because of the sheer number of accounts on Twitter.

To teach the system, I started finding accounts that were breaking Twitter rules: they were tweeting disinformation or engaging in targeted harassment. In short, anything that clearly violated the terms of service.

I took 2,500 "good" accounts and 2,500 "bad" accounts and trained the system on them using machine learning. This meant the system had to analyze over 5 million tweets.

After that training, the system started to identify good and bad accounts by itself, and we kept training it to improve. Bot Sentinel now automatically looks for certain indicators of what is considered good and bad, and it is constantly learning.
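Bouzy does not say which model Bot Sentinel uses, so the following is only a rough illustration of the supervised idea he describes: learn from accounts hand-labeled "good" or "bad", then score new accounts. It assumes a multinomial Naive Bayes classifier over an account's pooled tweets; the class, the tokenizer, and the four-account training set are hypothetical, and this is not Bot Sentinel's actual model.

```python
import math
import re
from collections import Counter

def tokenize(text):
    # Lowercase and split into simple word tokens (a real system needs far more care)
    return re.findall(r"[a-z0-9']+", text.lower())

class NaiveBayesAccountClassifier:
    """Hypothetical multinomial Naive Bayes that labels an account from its pooled tweets."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha                # Laplace smoothing constant
        self.word_counts = {}             # label -> Counter of token frequencies
        self.account_counts = Counter()   # label -> number of training accounts
        self.vocab = set()

    def fit(self, labeled_accounts):
        # labeled_accounts: iterable of (list_of_tweets, label) pairs
        for tweets, label in labeled_accounts:
            self.account_counts[label] += 1
            counts = self.word_counts.setdefault(label, Counter())
            for tweet in tweets:
                tokens = tokenize(tweet)
                counts.update(tokens)
                self.vocab.update(tokens)
        return self

    def log_scores(self, tweets):
        total_accounts = sum(self.account_counts.values())
        scores = {}
        for label, counts in self.word_counts.items():
            # Log prior: share of training accounts carrying this label
            score = math.log(self.account_counts[label] / total_accounts)
            denom = sum(counts.values()) + self.alpha * len(self.vocab)
            for tweet in tweets:
                for token in tokenize(tweet):
                    if token in self.vocab:  # ignore words never seen in training
                        score += math.log((counts[token] + self.alpha) / denom)
            scores[label] = score
        return scores

    def predict(self, tweets):
        scores = self.log_scores(tweets)
        return max(scores, key=scores.get)

# Toy, hypothetical training set: (tweets, label) per account
training = [
    (["Great turnout at the climate march today",
      "Reading a new report on renewable energy"], "good"),
    (["Thanks to the journalists covering the local election"], "good"),
    (["Vitamin C cures coronavirus, the media is hiding it",
      "Share before they delete this"], "bad"),
    (["The media is lying about the election, share before they delete this"], "bad"),
]

model = NaiveBayesAccountClassifier().fit(training)
print(model.predict(["They are hiding the cure, share before they delete this"]))  # bad
```

Naive Bayes is used here only because it fits in a few lines. It ignores word order and context entirely, so it cannot capture the kind of nuance that makes the real task hard.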

Human language is full of nuance: the same words and expressions can mean different things depending on the context. The algorithm has to learn this, and once you add other languages, the problem becomes even bigger. So the system is constantly being retrained.

It is now able to distinguish perfectly between accounts that are tweeting normally and accounts that are "breaking Twitter rules".


This leads to another question: can Bot Sentinel work with languages other than English?

C.B.: It can. We trained it in English, but it can learn other languages as well. In the past few weeks, people in Brazil have started using Bot Sentinel, and now the system is learning the language and is able to determine which accounts tweeting in Portuguese are fake.

I don't know much about Brazilian politics, nor do I speak Portuguese, yet the system does, and it is able to pick out which tweets are generated by inauthentic accounts.

We do not control the platform; the platform controls itself. What I mean by that is that it is completely autonomous and constantly learning.

Can users help Bot Sentinel learn other languages, then?

C.B.: The best way is to use it and help the machine learning. We started with English because it takes a lot of work to set up a system like this. We also need more funds than we have available.

Why did you start with Twitter and not with other social media platforms?

C.B.: We wanted to be strong on Twitter before branching out to other platforms, but we do privately monitor Facebook, YouTube, Reddit, and Instagram.

This platform can be used for anything; it was not designed just for Twitter. We started with Twitter because that's where journalists find news, and we also felt some of these inauthentic accounts were able to sway what journalists were reporting.

If I find an inauthentic account with Bot Sentinel, does the report go automatically to Twitter?

C.B.: Right now you have to report it manually. We did not want our platform to spam Twitter with reports; we are still looking for a way to do this automatically without spamming Twitter and getting them upset. We know Twitter is working on a new API that should allow reports to be sent automatically, but until then you still need to report accounts manually.

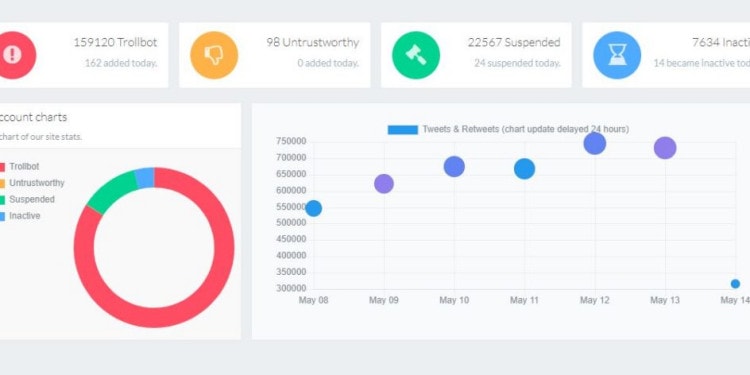

I have seen that 27,000 inauthentic accounts spotted by Bot Sentinel were suspended.

C.B.: We are working on a new platform, and we are tracking over 98k problematic accounts, the most toxic and dangerous accounts we track. Of those 98k problematic accounts, 27.74% were suspended by Twitter. That is an incredibly significant number and a testament to the accuracy of our classification model.
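Taking "98k" as exactly 98,000 (the precise total is not given), the suspension rate lines up with the figure of roughly 27,000 suspended accounts:

$$0.2774 \times 98{,}000 \approx 27{,}185$$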

I don't like to talk negatively about Twitter, because they are letting us use their API, but I think inauthentic accounts are a serious problem, and it will take multiple companies like mine, and multiple technologies, to sort this out. Ideally, Twitter should work more with us and with others trying to fix this.

Why isn't Twitter acting? Do they have an interest in keeping as many users as possible?

C.B.: They always say that they have more information than what is publicly available and that they are actively working to sort this out. The reality is that there are still inauthentic accounts spreading disinformation. They cannot deny that this is happening on their platform.

I understand their position: it is hard to draw the line between allowing freedom of speech and blocking tweets that contain "opinions" but also spread disinformation.

Just recently, in a tweet, Twitter declared it was not going to allow disinformation related to COVID-19 on its platform. I immediately replied, pointing to several accounts spreading conspiracy theories and urban legends, such as the claim that vitamin C could cure the coronavirus.

This shows that, because of the sheer number of tweets and accounts, disinformation is hard to control. They do suspend accounts, but there is so much content to verify that it is hard for them to keep up. As I said before, they need more companies like mine working with them to sort this out. It is a huge effort.

When using the Bot Sentinel extension in my laptop's browser, I noticed that some Twitter accounts are labeled as untrustworthy. Can you explain that?

C.B.: That label got more pushback than anything else, because the labeling is a manual process, not an automatic one. Some accounts are "real" but spread fake news, and in that case the label could be seen as judging the person tweeting rather than the content itself.

We have scrapped that from the new version of Bot Sentinel. In the future, I hope to create a panel of people who would have to follow clear guidelines before applying this label to any account.

When will the new version of Bot Sentinel be available?

C.B.: The beta is available now to our donors, and we will release it to the general public by the end of May. We are also working on an algorithm that will be able to detect whether users are native speakers of the language they are tweeting in. It's very complicated! We are always seeking funds to continue our work.

If you wish to support Bot Sentinel and have early access to the new version, you can donate here.

Editor's Note: The opinions expressed here by Impakter.com contributors are their own, not those of Impakter.com.